At Mapbox, we've adopted Dagster without breaking compatibility with our legacy Airflow systems -- and with huge gains to developer productivity.

<img src="https://dagster.io/posts/incremental-adoption-mapbox/mapbox-logo-black.png" alt="Mapbox logo" width="384" height="86"

The Geodata teams at Mapbox are responsible for continually updating the map ofthe world that powers our developer data products and services.

Like many teams using Airflow, we found that continued development in Airflow was painful and costly.

Our data sources range from hard drives that get delivered from our vendors inthe mail once a month, to public-facing APIs that we poll every day, toreal-time feedback on thousands of addresses from the 700M end users who touchour maps every day.

We process this data using about a hundred different data pipelines and flowsto create maps, road network data for navigation, point of interest datasets,and search indices for addresses. The core of our address data processing isthe conflation engineand correction system, which combines over a billion addresses with road,parcel, and building datasets to get maximum coverage of existing addressesand calculate the most correct address points possible.

Mapbox has historically relied heavily on Airflow for orchestration, and manyteams, including ours, run their data pipelines on their own Airflow instances.

But like many teams using Airflow, we found that continued development inAirflow was painful and costly.

To fully test their orchestration code in Airflow, our engineers would typicallyneed to run a bunch of deploys to production, since setting up the dependenciesto run DAGs locally and ensuring that they were correctly configured was toocumbersome. This led to unacceptably slow dev cycles — not to mentionsignificant cost, since all our dev work had to be done on our productioninfrastructure.

We needed a solution that would allow for incremental adoption on top of our existing Airflow installation.

In an environment like ours, where the outputs of data pipelines arebusiness-critical, SLAs are strict, and many teams are involved in creatingdata products, we can’t stop the world and impose a new technology all at once.Luckily, Dagster comes with a built-in Airflow integration that makesincremental adoption on top of Airflow possible.

We started using Dagster because we needed to improve our developmentlifecycle, but couldn't afford to undertake a scratch rewrite of our existing,working pipeline codebase. Dagster lets us write pipelines using a clean setof abstractions built for local test and development. Then, we compile ourDagster pipelines into Airflow DAGs that can be deployed on our existingscheduler instances.

This is especially critical for us since our Dagster pipelines need tointeroperate with legacy Airflow tasks and DAGs. (If we were starting fromscratch, we would just run the Dagster scheduler directly.)

We started by writing new ingestion pipelines in Dagster, but we’ve since moved our core conflation processing into a Dagster pipeline.

Let's look at a simplified example, taken from our codebase, of what thislooks like in practice for normalizing, enriching, and conflating addressesin California.

Our Dagster solids make extensive use of Dagster's facilities for isolatingbusiness logic from the details of external state. For example, one solid,export_conflated, reads enriched and normalized addresses from the upstreamsolids and then conflates them into a single deduplicated set of addresses,taking the best address across multiple sources.

Python

The body of this solid is mostly Pyspark code, operating on tables that havebeen constructed by upstream solids. In a pattern that probably feels familiar,we constuct some source tables, join them, and then export them to a conflatedtable.

You'll note that we use Dagster's config schema to parametrize our businesslogic. So, for example, we can set export_csv_enabled to control whether a.csv of the output data frame will be exported or not.

We also use Dagster's resource system to provide heavyweight externaldependencies. Here we provide an conflate_emr_step_launcher resource and apyspark resource. By swapping out implementations of these resources, wecan control where and how our Pyspark jobs execute, which lets us controlcosts for development workflows.

Our step launcher is actually a fork of the open-source Dagster EMR steplauncher: we've modified it to handle packaged Airflow DAGs and dependencies,as well as to create ephemeral EMR clusters for each step rather thansubmitting jobs to a single externally managed EMR cluster. This wasstraightforward for us to implement, thanks to Dagster's pluggableinfrastructure.

What's really exciting about this is that our solid logic stays the sameregardless of where we're executing it. Solids are written in pure Pyspark, andthe step launcher implementation controls whether they execute in ephemeral EMRclusters or on our production infrastructure. The code itself doesn't changebetween dev and test.

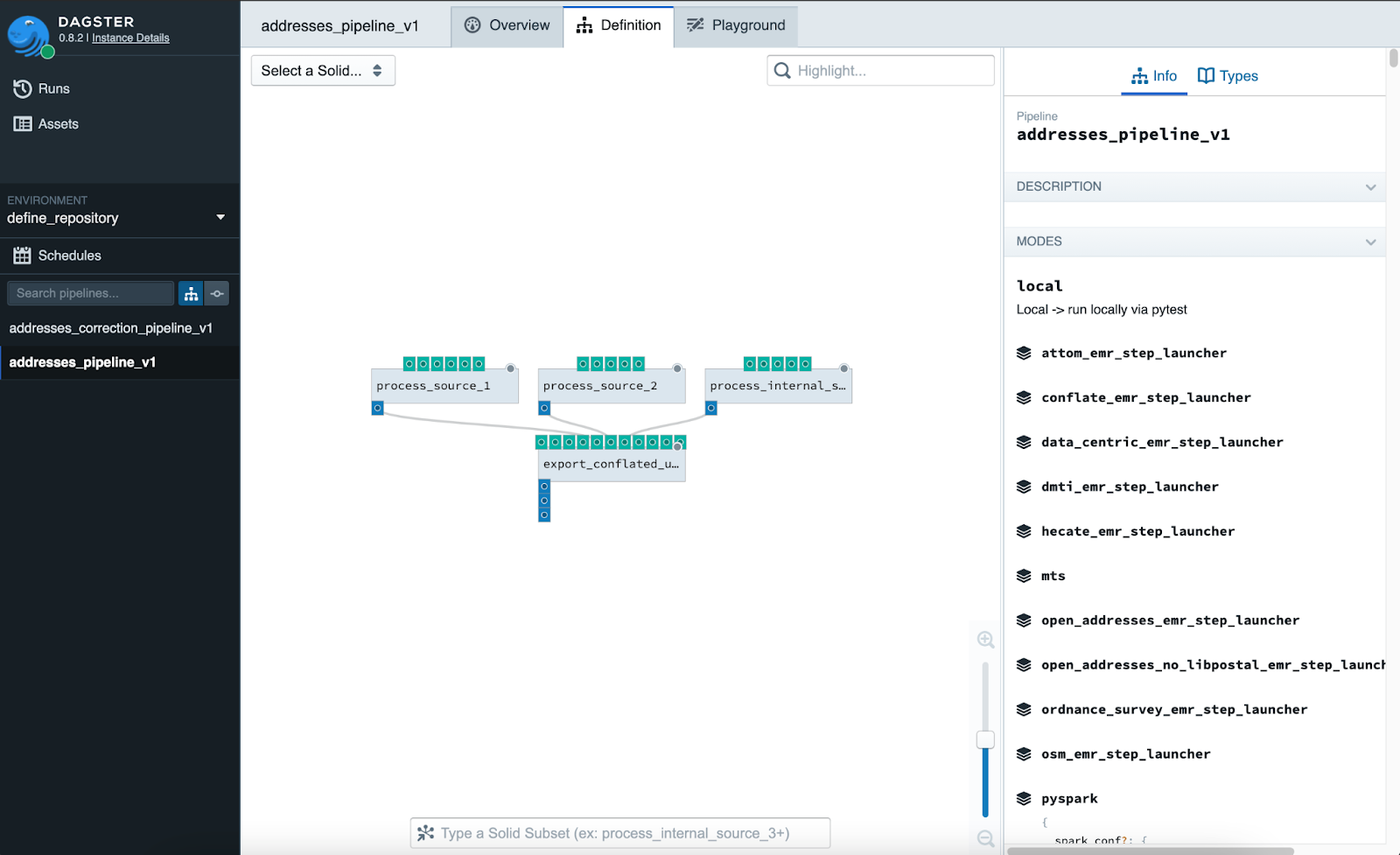

This means that as we're developing our solids, we can work with them in Dagitfor incremental testing and a tight dev cycle.

Developing Pyspark solids in Dagit

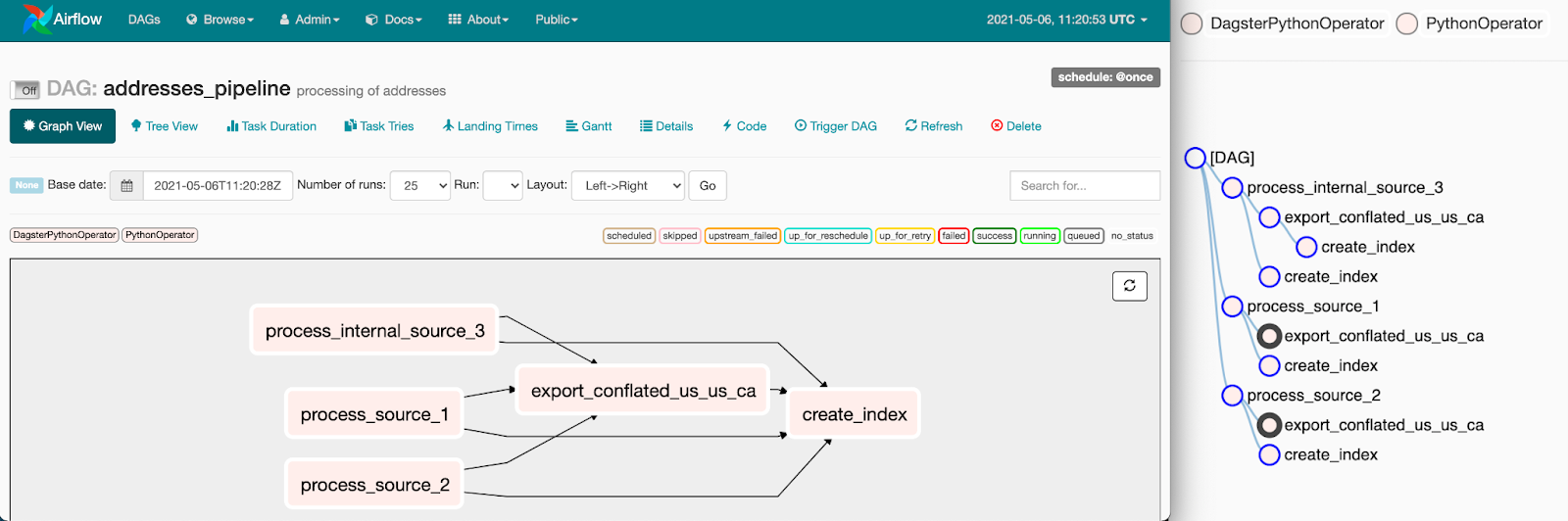

After we construct our Dagster pipelines from our solids, we compile them intoAirflow DAGs. Here, for example, we take a Dagster pipeline,repository_addresses_pipeline.addresses_pipeline_v1 (containing the examplesolid above). We compile it to Airflow usingdagster_airflow.factory.make_airflow_dag, and then edit the compiledtemplate to hook our new DAG up to an existing Airflow Task, create_index.

Python

In production, we do some even fancier things -- for instance, hooking up ourcompiled DAGs to our existing library of Airflow callbacks for custom alerting.

Integrating the Dagster solids into an existing Airflow DAG

Our compiled Dagster pipelines sit with all our other Airflow DAG definitions,and they're parsed into the DagBag and scheduled with all the rest.

That means we can develop and test a Dagster pipeline locally using Dagstertooling, including Dagit, and then monitor production executions and view thedependencies on Airflow tasks using Airflow.

We started adopting Dagster by writing new ingestion pipelines in Dagsterinstead of in Airflow, but we’ve since moved our core conflation processinginto a Dagster pipeline.

With Dagster, we've brought a core process that used to take days or weeks of developer time down to 1-2 hours.

This has made a huge difference for developer productivity. Just to give a senseof scale, one of the goals of this project was to reduce the human time ittakes to conflate all sources in a region (i.e. state or country) down to a dayor less -- this previously took days or weeks of human effort. With the newDagster pipeline and other performance improvements, we were able to reducethis to an average of 1-2 hours. This improvement in productivity was also seenin creating new ingestion pipelines for new address data sources.

With Dagster, developers can orchestrate their pipelines locally in test,swapping in ephemeral EMR clusters to test Spark jobs instead of running on ourproduction infrastructure. Because we can now run on dev-appropriateinfrastructure, testing is so much less costly -- more than 50% -- that in thefirst few weeks of this project our engineering manager was worried there wassomething wrong with our cost reporting.

Testing is so much less costly that our engineering manager was worried there was something wrong with our cost reporting.

Dagster has let us dramatically improve our developer experience, reduce costs,and speed our ability to deliver new data products -- while providing a pathfor incremental adoption on top of our existing Airflow installation and lettingus prove the value of the new technology stack without a scratch rewrite.

If the kind of work we're doing on the Mapbox data teams sounds interesting to you, Mapbox is hiring!